“Deepfake” technology utilises artificial intelligence, typically to swap out one person’s face for another. Sounds innocent enough, right? While it has obvious uses when it comes to creating comedy, meme and advertising content, is the technology headed somewhere more sinister?

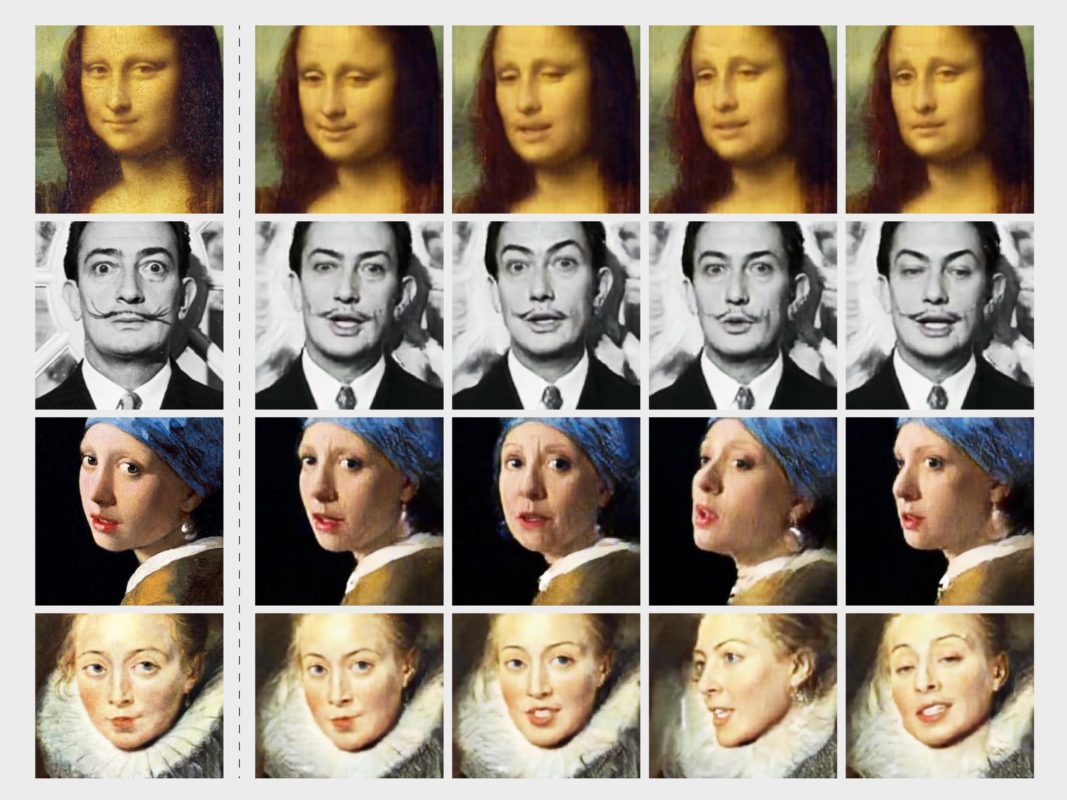

Deepfakes, a combination of “deep learning” and “fake”, are digitally-forged videos created through an image synthesis technique. The technology can seamlessly make it look like someone is doing or saying something that they are not. It can also generate completely fake human faces from a pool of reference pictures.

This week, the US House of Representatives intelligence committee asked social media giants Google, Twitter and Facebook how they were going to approach the issue of deepfakes in the lead-up to the 2020 US election. Where Fake News was the buzz-phrase of the previous election, it seems that Deepfake could be the next.

Concerningly, the term first entered the public consciousness when it was reported that a Reddit user was using the technology to place female celebrities’ faces onto porn videos. This has massive implications for consent, blackmail and revenge porn, and not just for those in the public eye.

Face-swapping

In January 2018, a deepfake desktop application called FakeApp launched. This app gives users the ability to easily create manipulated deepfake images and videos at home. Deepfakes that swap the faces of one person with another are on the more harmless side of this technology, as they are relatively easy to spot. At least for now.

Much of this variety of deepfake resembles Snapchat filters, where a user can swap faces with a friend or a picture of a celebrity. The results are comical and easy to identify.

The technology is getting more and more accessible, however, with deepfake YouTube channels springing up left and right. As the technology becomes more sophisticated, it will become harder to know if what we are seeing is real.

The Spectre Project

Facebook’s current position on faked videos is not to remove them, but rather to reduce their reach and searchability.

Artists Bill Posters and Daniel Howe recently partnered with company Canny AI to create digitally generated videos in which famous faces spoke about the mysterious “Spectre”. This turned out to be a hype campaign for an exhibition the artists were organising.

The results provide an insight into where the technology is at right now, and where it has the potential to lead.

The CEO of San Diego startup Truepic, which is developing image-verification technology to combat the rise of deepfakes, had this to say:

“While synthetically generated videos are still easily detectable by most humans, that window is closing rapidly. I’d predict we see visually undetectable deepfakes in less than 12 months,”

“Society is going to start distrusting every piece of content they see.”

Fake News

Earlier this year, President Trump tweeted a doctored video of Nancy Pelosi designed to make her appear to be slurring her speech. The ability of platforms to deal with fake videos of this nature is lacking, with only YouTube removing the video.

“PELOSI STAMMERS THROUGH NEWS CONFERENCE” pic.twitter.com/1OyCyqRTuk

— Donald J. Trump (@realDonaldTrump) May 24, 2019

While this video is not a deepfake per se, rather a video with manipulated audio, it says much about where we’re headed. When the most powerful man in America sees no problem in using this fraudulent tech to his advantage, how will we police the use of this AI as it becomes more ubiquitous?

The debate over deepfakes is a tiny fraction of the greater issues surrounding ethics in our technological march forwards.

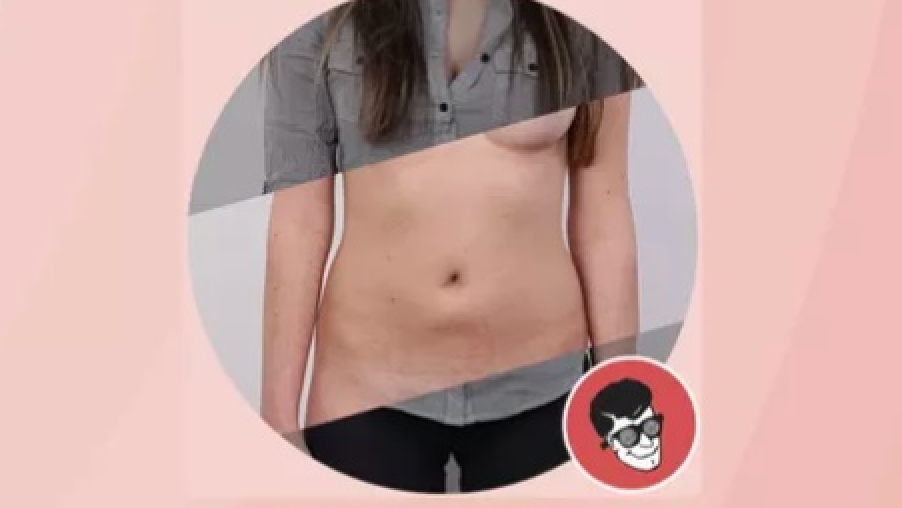

DeepNude

This year, a computer programmer created an app that uses neural networks to “remove” clothing from images of women, manipulating bodies to appear nude.

The app edits a photo of a clothed woman to create a naked version of that image. The most potentially devastating uses of deepfakes lie in how women or other marginalised groups will be targeted.

CEO of revenge porn activism organisation Badass, Katelyn Bowden, said,

“This is absolutely terrifying,”

“Now anyone could find themselves a victim of revenge porn, without ever having taken a nude photo. This tech should not be available to the public.”

Deepfuture

We’re at the tipping point of this technology. As it stands now, it is relatively easy to discern what is real and what is not. But we’re just at the beginning. It’s going to become more and more difficult to tell if what we’re looking at is an authentic image or video.

On the flip side, we’re entering a world where public figures may be able to cast off accountability by claiming that a genuine video of them was actually digitally created.

Deepfake-detecting technology is stuck playing catch-up, and the odds look stacked against it. As Professor Hany Farid of UC Berkeley put it:

“We are outgunned… the number of people working on the video-synthesis side as opposed to the detector side is 100 to 1.”

Tech companies have responsibilities to the public in creating programs and software that improve our lives, rather than technology that calls our very perception of reality into question.

The way we behave and perceive things online is about to change, whether we like it or not.
