The next in the series of thoughts from DESIRE GROUPE founder Paul G Roberts looks at the many experts calling loudly for urgent regulation of AI.

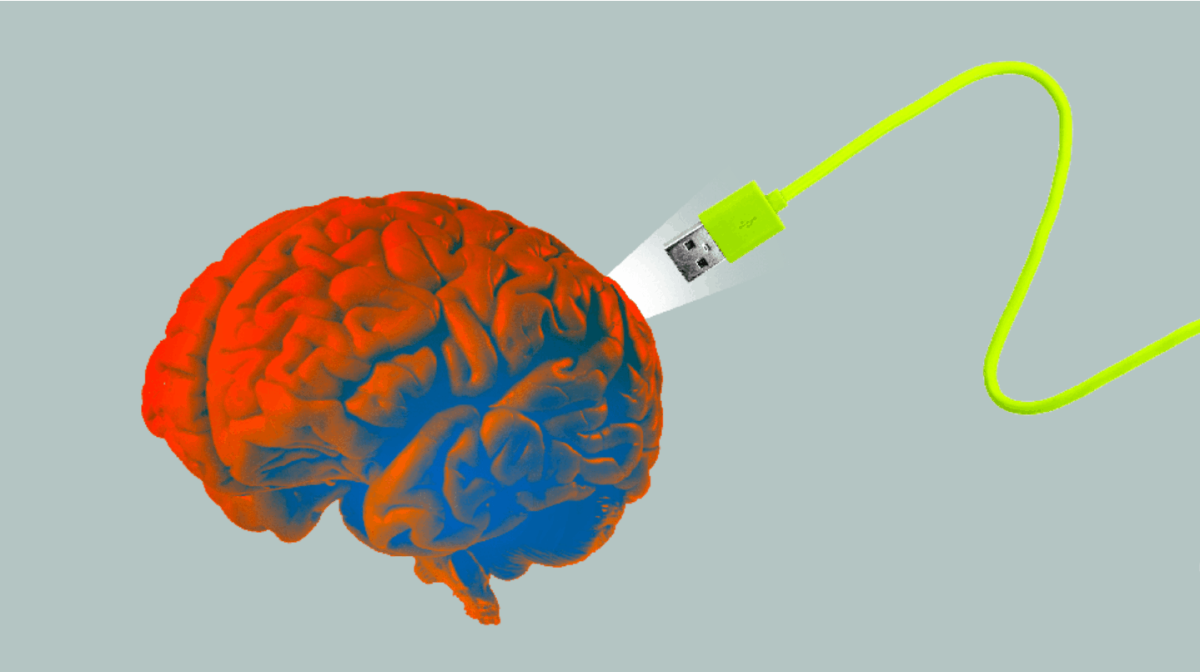

The cries for AI regulation keep growing louder, as AI developers make yet another plea to regulate the technology. Recently Sam Altman, Geoffrey Hinton, and a cast of thousands issued an open letter, interpreted in the mainstream media as warning that AI poses a “risk of extinction” to humanity on the scale of nuclear war or pandemics. Mitigating that risk should be a “global priority,” in their view.

This view supposes that the human species currently dominates other species because the human brain has some distinctive capabilities that other animals lack. If AI surpasses humanity in general intelligence and becomes superintelligent, then it could become difficult or impossible for humans to control. Just as the fate of many endangered species depends on human goodwill, so might the fate of humanity depend on the actions of a future machine superintelligence.

Yet is it feasible for regulations to be created and adopted to mitigate these risks? And what might their consequences be? Regulation in and of itself is far from a risk-free process. After all, it isn’t clear that the regulations adopted to mitigate the risks of a coronavirus-based epidemic have not been as harmful to society overall as the risks they sought to address, if not more so.

While lockdowns may have pushed back the time at which individuals contracted COVID-19 to reduce the spread of infection, the economic consequences of high inflation, educational consequences of lost schooling, and social (even neural) consequences of disrupted face-to-face human engagement pose existential threats to a significant subset of humanity, and impose lifelong limitations on many, many more.

Isaac Asimov’s Three Laws of Robotics are a set of rules designed to govern the behaviour of robots in his science fiction stories.

The laws are:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Sounds pretty simple, right?

While these laws have been influential in popular culture, they are not a practical framework for regulating AI in the real world. This is because AI is not a single entity or technology, but rather a collection of different technologies and systems, each with its own capabilities and limitations.

Furthermore, the laws are based on the assumption that robots are autonomous and capable of making decisions on their own, which is not necessarily the case for most AI systems today. Instead, most AI systems are designed to operate within predetermined parameters and are not capable of making independent decisions.

In addition, the laws do not address many of the real-world issues associated with AI, such as bias, privacy, and security. While the laws may be a useful starting point for thinking about the ethical and moral implications of AI, they are not a practical framework for regulating the technology.

That being said, the ideas behind Asimov’s laws – namely, that AI should be designed to prioritise the safety and well-being of humans – are certainly relevant to the discussion of AI regulation. As policymakers grapple with the challenges of governing this rapidly evolving technology, it will be important to consider how to ensure that AI is designed and used in ways that are safe, ethical, and beneficial for society as a whole.

While the call to regulate AI has been growing louder in recent years, there are those who argue that such regulation may not be feasible, or even desirable. In this essay, we will examine the arguments for and against AI regulation, and explore the challenges that policymakers will face in trying to govern a technology that is rapidly evolving and increasingly integrated into our lives.

The Case for AI Regulation

The case for AI regulation is rooted in concerns about the potential risks and unintended consequences of AI development. As AI systems become more powerful and pervasive, there are fears that they could be used to undermine democracy, perpetuate social inequality, or even pose an existential threat to humanity. For example, some worry that AI could be used to create autonomous weapons that could make decisions about who to kill without human oversight, or that it could be used to manipulate public opinion or perpetuate discrimination.

Proponents of AI regulation argue that without proper safeguards in place, these risks will only increase as AI becomes more advanced. They point to the fact that many AI systems are already being used in ways that are potentially harmful, such as facial recognition technology that has been shown to be biased against people of color and women, or predictive policing systems that may reinforce existing biases in the criminal justice system.

In addition, supporters of AI regulation argue that the technology is too complex and too powerful to be left entirely in the hands of private companies and individual developers. They point to the fact that many of the most powerful AI systems are being developed by a handful of large tech companies, and that these companies may not have the incentives or the expertise to ensure that their systems are safe and beneficial for society as a whole.

The Case Against AI Regulation

Despite these concerns, there are those who argue that AI regulation may not be feasible, or even desirable. One of the main arguments against AI regulation is that it may be difficult or impossible to define what constitutes “good” AI versus “bad” AI. Unlike other technologies, such as nuclear weapons or genetically modified organisms, AI systems are not inherently dangerous or harmful. Rather, it is the way that they are used that determines their impact on society.

As a result, some argue that trying to regulate AI is akin to trying to regulate “innovation” or “creativity” – it is simply too broad and too vague a concept to be effectively regulated. Others point to the fact that AI is a rapidly evolving technology, and that any regulations put in place now may quickly become outdated as the technology continues to advance.

Another argument against AI regulation is that it may stifle innovation and discourage investment in the technology. If companies are forced to comply with a complex and ever-changing set of regulations, they may be less likely to invest in AI research and development, or to bring new AI products to market. This could ultimately slow down the pace of technological progress and limit the potential benefits of AI for society.

Finally, some argue that AI regulation may be unnecessary, as there are already existing legal frameworks in place that can be used to address many of the concerns raised by AI development. For example, existing laws against discrimination, privacy violations, and other forms of harm could be applied to AI systems just as they are applied to other technologies.

The Challenges of AI Regulation

Whichever side of the argument one takes, it is clear that policymakers will face significant challenges in trying to govern this rapidly evolving technology. One of the biggest is simply keeping up with the pace of technological change. As AI developments continue to accelerate, policymakers will need to constantly update their regulatory frameworks to ensure that they remain relevant and effective.

Another challenge is the global nature of AI development. Unlike other technologies that can be regulated at the national level, AI is a global industry that is not bound by national borders. This means that any regulations put in place by one country may be circumvented by companies operating in other countries with more permissive regulatory environments.

In addition, there is the challenge of balancing the potential risks and benefits of AI. While there are certainly risks associated with the development of AI, there are also many potential benefits, such as improved healthcare, more efficient transportation systems, and more personalized education. Policymakers will need to carefully weigh these factors when designing regulations, to ensure that they do not inadvertently stifle innovation or limit the potential benefits of AI for society.

Finally, there is the challenge of ensuring that any regulations put in place are enforceable. Unlike other technologies that can be physically controlled or restricted, AI is a software-based technology that can be easily replicated and distributed. This means that any regulations put in place will need to be designed in such a way that they can be effectively enforced, without stifling innovation or imposing undue burdens on companies and individuals.

Conclusion

The question of whether AI regulation is feasible is a complex and multifaceted one. While there are certainly risks associated with the development of AI, there are also many potential benefits, and any regulation put in place will need to carefully balance these factors. At the same time, policymakers will face significant challenges in trying to govern this rapidly evolving technology, including keeping up with the pace of technological change, addressing the global nature of AI development, and ensuring that any regulations put in place are enforceable.

Ultimately, the question of whether AI regulation is feasible will depend on a number of factors, including the pace of technological change, the global regulatory environment, and the ability of policymakers to design effective and enforceable regulations. As AI continues to evolve and become more integrated into our lives, it will be important to continue this conversation and to explore new ways of governing this powerful technology.
