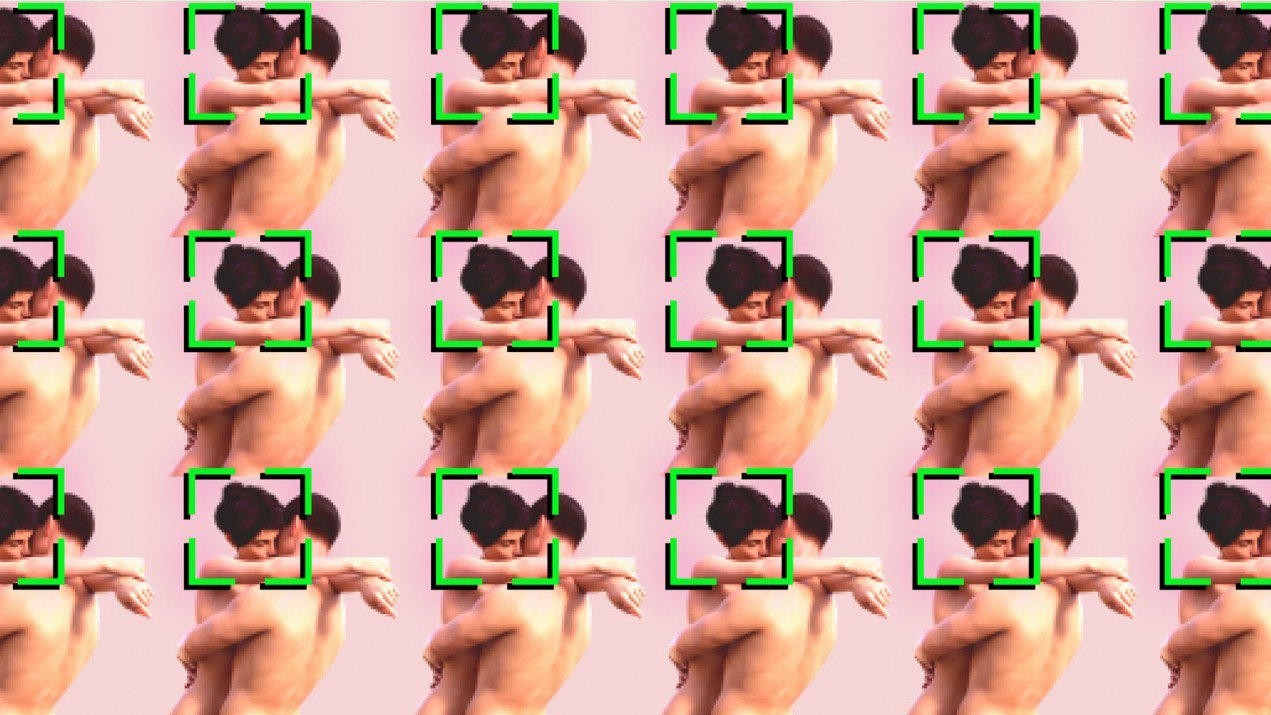

Deepfakes are on the rise. A new report by Deeptrace Labs reveals that non-consensual deepfake pornography accounts for 96% of all deepfake videos online. It’s no surprise that women are the most at risk.

Video is no longer a reliable record. Deepfake technology is growing, and it’s becoming lucrative.

The term first entered our collective consciousness in 2017, when a Reddit user used face-swapping software to put the faces of female celebrities into pornographic videos. Needless to say, this is very concerning.

Just two years later, the technology has proliferated. A new report by Deeptrace Labs, a cybersecurity company that detects and monitors deepfake technology, has confirmed our fears. The report shares independently sourced data and insights about the real-world impact of deepfakes. And the results are sobering.

Cyberbullying on Steroids

Deeptrace Labs revealed that deepfakes are spreading rapidly: the number of deepfake videos online almost doubled over the last nine months, from 7,964 to 14,678. A key trend is the growing prominence of deepfake pornography, which is by its very nature non-consensual.

The report revealed that a shocking 96 per cent of all deepfake videos online are non-consensual deepfake porn. Most of these feature female celebrities, their likenesses edited into pornographic videos. On the top four deepfake porn hosting websites, 100 per cent of the subjects are women. Worst of all, these websites have received 134 million views, and counting.

Deeptrace found that 99 per cent of the subjects featured in deepfake pornography videos were actresses and musicians working in the entertainment sector. American and British actresses are featured most often, followed by South Korean K-Pop musicians.

Beyond the risks for women in the public eye, the technology also lets users create realistic revenge porn of a former partner, or of anyone whose life they want to ruin. The risk of this technology being used for cyberbullying is extremely high.

The study quoted professor and author Danielle Citron:

“Deepfake technology is being weaponised against women by inserting their faces into porn. It is terrifying, embarrassing, demeaning, and silencing. Deepfake sex videos say to individuals that their bodies are not their own, and can make it difficult to stay online, get or keep a job, and feel safe.”

The report also found that 802 deepfake videos were hosted on mainstream pornography websites, where viewers have no way of knowing that they’re watching edited footage.

Weaponised Against Women

There are many ways in which women’s identities and bodies are being violated online. Apps like DeepNude give users the technology to “remove” the clothing on a photo of a woman. The app then uses image translation algorithms to falsely generate naked parts of the body that were covered in the original image.

The app was specifically made for use on images of cis women. It doesn’t work on men. While the very concept of this app is disgusting enough, the resulting images can have real and disastrous effects on the lives of victims.

Although its creators took the website down following a much-deserved backlash, the software still circulates online and is impossible to remove entirely. This technology is frighteningly easy to access.

A Current Threat

Much of the media coverage about deepfakes up until this point has been about the potential for the technology to influence elections. But the true harm lies in the effect that it is already having on women.

Henry Ajder, head of research at Deeptrace, told the BBC:

“The debate is all about the politics or fraud and a near-term threat, but a lot of people are forgetting that deepfake pornography is a very real, very current phenomenon that is harming a lot of women.”

The threats posed by deepfakes are no longer just theoretical. Deepfake pornography is now a global phenomenon supported by significant viewership, with women exclusively targeted. There is no current data on how this technology affects the lives of transgender people, a group that is disproportionately sexually targeted online.

Now that the software exists, it is incredibly hard to stop these videos from emerging. And the phenomenon is spreading far beyond the realm of the rich and famous.

Founder of Deeptrace, Giorgio Patrini, stated upon the release of the report:

“Every digital communication channel our society is built upon, whether that be audio, video, or even text, is at risk of being subverted.”

Subscribe to FIB’s newsletter for your weekly dose of music, fashion and pop culture news!